Docugami @ Hugging Face: Expanded Docugami Foundation Model Benchmark Datasets Released on Hugging Face Hub

Today, we are excited to release our expanded Docugami Foundation Model (DFM) Benchmark Datasets on the Hugging Face Hub, making them easier for the industry to use. Three weeks ago, we announced these two new benchmarks for models that create and label nodes in Business Document XML Knowledge Graphs, along with results on those benchmarks showing that DFM outperforms OpenAI’s GPT-4 and Cohere’s Command model.

Larger Benchmark Datasets Released on the Hugging Face Hub

We have released the datasets for both CSL (Small Chunks) and CSL (Large Chunks) benchmarks on the Hugging Face Hub. Based on your initial feedback, this release significantly simplifies the process of accessing and experimenting with these datasets using the popular Hugging Face Datasets library.

In addition, we have significantly expanded both benchmark datasets: each eval set now includes more than 1,000 nodes. More details are available on the dataset cards:

- Contextual Semantic Labels for Small Chunks: CSL (Small Chunks) on Hugging Face Hub

- Contextual Semantic Labels for Large Chunks: CSL (Large Chunks) on Hugging Face Hub

These larger datasets are also being released on our GitHub repository, alongside our previously released eval code for these benchmarks. We look forward to your continued feedback and contributions.

Updated Results on Benchmarks

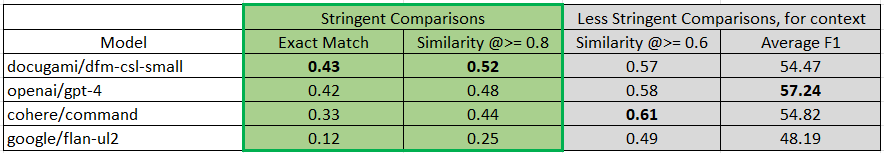

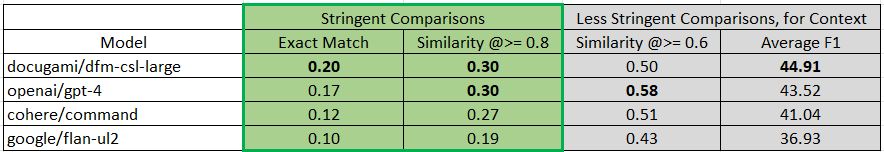

The Docugami Foundation Model (DFM) continues to outperform OpenAI’s GPT-4 and Cohere’s Command model on both benchmarks with these larger datasets.

Figure 1: Benchmark Results for CSL (Small Chunks)

Figure 2: Benchmark Results for CSL (Large Chunks)

Specifically, DFM outperforms on the more stringent comparisons, i.e., exact match and similarity > 0.8 (which can be thought of as "almost exact match" in terms of semantic similarity). This means that Docugami’s output matches human labels more often, either exactly or very closely.

For completeness and context, we included some other, less stringent metrics used in the industry, for example a token-wise F1 match. These less exact matches are less relevant in a business setting, where accuracy and completeness are critical. We previously released the code for these measurements on GitHub, and invite community feedback.
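To make the difference between a stringent and a less stringent metric concrete, here is a minimal sketch of exact match and token-wise F1 for a single predicted label. It is an illustration only, not the eval code released on GitHub: the function names, the lowercase and whitespace normalization, and the sample labels are all assumptions made for this example.

```python
from collections import Counter


def normalize(label: str) -> list[str]:
    """Lowercase and split on whitespace (an assumed, simplistic normalization)."""
    return label.lower().split()


def exact_match(predicted: str, reference: str) -> bool:
    """True only when the normalized token sequences are identical."""
    return normalize(predicted) == normalize(reference)


def token_f1(predicted: str, reference: str) -> float:
    """Harmonic mean of token precision and recall, ignoring token order."""
    pred_tokens = normalize(predicted)
    ref_tokens = normalize(reference)
    # If either side is empty, score 1.0 only when both are empty.
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears on both sides.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical labels, chosen only to illustrate the gap between metrics.
    predicted = "Lease Start Date"
    reference = "Lease Commencement Date"
    print(exact_match(predicted, reference))
    print(round(token_f1(predicted, reference), 3))
```

In this sketch, the two sample labels share two of their three tokens, so token-wise F1 awards partial credit while exact match awards none. This is why the F1 score is the more forgiving of the two.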

Stay Tuned for More

This is an exciting time to be part of the Docugami journey.

Docugami is available for anyone to use today, and we offer a developer API with free trials at docugami.com. We are continuously improving the product and engaging with the community, including through our recently released integrations with LangChain and LlamaIndex. We also welcome discussions in our new Docugami Discord.

Today’s release of our expanded benchmark datasets on the Hugging Face Hub is an exciting step forward in our mission to transform documents into data, harnessing the power of AI for business documents. Stay tuned for more news to come!